Executive Summary

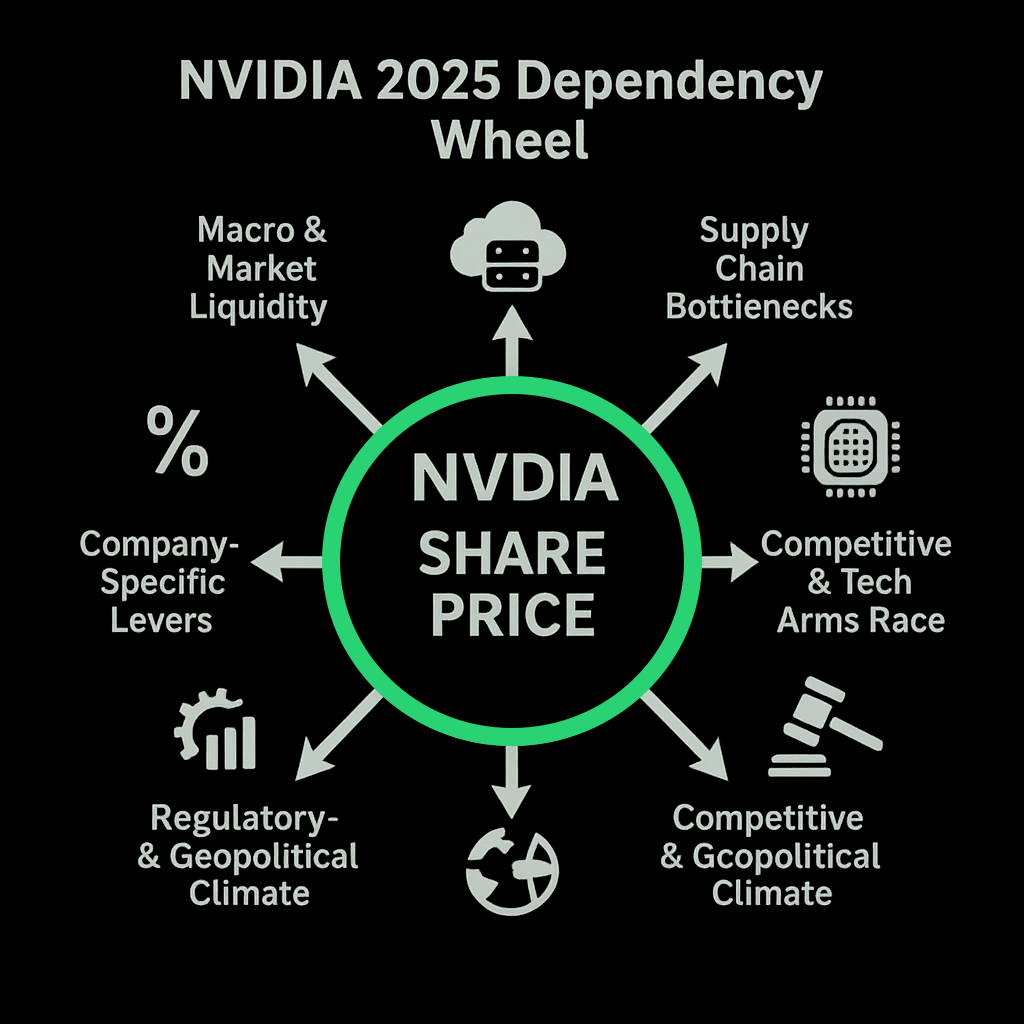

NVIDIA heads into mid-2025 as the market’s purest bet on AI compute, yet its valuation hangs on a complex mix of macro conditions, demand, supply, competition, regulation, and internal execution. Real yields near 2 percent and liquidity swings can add or subtract multiple turns from the stock’s price-to-earnings ratio. Meanwhile, hyperscaler capital-spending plans, still north of a quarter-trillion dollars, can move data-centre revenue materially with even small adjustments.

At the same time, the supply chain remains fragile: a single hiccup in TSMC’s CoWoS packaging line or a shortfall in high-bandwidth memory could erase a billion dollars of quarterly sales and dent margins. Competitive pressure is intensifying as AMD, cloud-built ASICs, and OpenAI’s new “Tigris” chip chase NVIDIA’s price-performance edge. Policy risk, from tighter U.S. export caps to any Taiwan Strait flare-up, can impose an overnight “war discount.”

Internally, gross margin is marching toward 74 percent and buy-backs are offsetting dilution, but stock-based compensation and wafer pricing still lurk as spoilers. Dark Stone Capital is running an above-benchmark stake over the next year: demand is locked in, and supply bottlenecks, rather than orders, remain the critical swing factor. Looking three years out, we stay positive but are no longer all-in, because cloud self-silicon programs and China restrictions will steadily chip away at super-normal economics. Upside depends on cheaper memory, delays in cloud ASIC roll-outs, or a slide in real yields. Downside lives in fresh export controls, cost-parity silicon from Google or Amazon, or a geopolitical shock. In short, NVIDIA is still the cleanest proxy for AI infrastructure, but alpha will come from agile position-sizing around supply headlines, not blind conviction.

Macro & Market Liquidity

The 10-year Treasury Inflation-Protected Securities (TIPS) yield still hovers near 2.1 percent; every additional half-point typically strips several turns from NVIDIA’s price-to-earnings (P/E) multiple. The U.S. dollar remains about four percent stronger against the Taiwan dollar and Korean won, trimming reported revenue but cheapening foundry and memory inputs. Liquidity is the wild card: the iShares Semiconductor ETF (SOXX) has soaked up roughly eleven billion dollars year-to-date, while call-option volume sits near record highs. That combination is fuel for both melt-ups and sudden air-pockets.

Speculative note: a surprise Federal Reserve pivot that drives real yields lower could expand the multiple by two-to-three P/E turns; a five-billion-dollar exit from SOXX would likely yank the share price into a double-digit draw-down.
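To see why a half-point move in real yields can cost several P/E turns, here is a minimal sketch using a stylised constant-growth (Gordon) relationship, P/E ≈ 1 / (real yield + equity risk premium - real growth). It assumes full earnings payout and that only the real yield moves. The starting P/E of 30 is a hypothetical input, not a figure from this report, and the function name is illustrative.

```python
def pe_after_yield_shift(base_pe: float, yield_shift: float) -> float:
    """Stylised P/E after a change in real yields.

    Assumes P/E = 1 / (r + erp - g): a constant-growth model with full payout,
    where only the real yield r moves. The implied spread (r + erp - g) is
    backed out of the starting multiple. yield_shift is a decimal:
    0.005 means +50 basis points.
    """
    spread = 1.0 / base_pe
    new_spread = spread + yield_shift
    if new_spread <= 0:
        raise ValueError("discount rate must stay above growth")
    return 1.0 / new_spread


if __name__ == "__main__":
    base = 30.0  # hypothetical starting multiple, not taken from the report
    for shift_bp in (-50, -25, 0, 25, 50):
        pe = pe_after_yield_shift(base, shift_bp / 10_000)
        print(f"{shift_bp:+4d} bp -> P/E {pe:5.1f} ({pe - base:+.1f} turns)")
```

In this toy model a 50 bp drop adds more turns than a 50 bp rise removes, because 1/x is convex. That asymmetry is a property of the sketch, not an estimate for NVIDIA.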

Secular Demand Drivers

Cloud hyperscalers are still the gale-force tail-wind. Microsoft budgets about eighty billion dollars for AI gear this year, Amazon around one-hundred-and-five billion, Alphabet roughly seventy-five billion. A one-percent swing in that pool moves NVIDIA’s data-centre revenue roughly three-quarters of a percent. Enterprise software is catching fire: vendors like Snowflake and ServiceNow now say more than forty percent of new bookings contain generative-AI modules. Gaming waits for the late-2025 “Ada-Next” card, crypto mining remains a rounding error post-Merge, and automotive silicon—think Mercedes Level-3 autonomy—is an out-year option, not a 2025 cash machine.

Speculative note: a five-percent cap-ex deferral by any hyperscaler could shave two percent off NVIDIA’s fiscal-2026 (roughly calendar-2025) top line; a positive cap-ex surprise could trigger an “estimate-chase” rally.
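The capex figures and the one-percent rule of thumb above reduce to simple arithmetic. The sketch below sums the three budgets cited (about 260 billion dollars, consistent with “north of a quarter-trillion”) and applies the stated 0.75 elasticity of data-centre revenue to the pool. It is a first-order linear sketch that ignores timing, product mix, and second-order effects. The function names and the $10bn trim in the example are hypothetical.

```python
# Capex budgets cited in the text, in billions of US dollars.
CAPEX_BN = {"Microsoft": 80.0, "Amazon": 105.0, "Alphabet": 75.0}

# Rule of thumb from the text: a 1% swing in the pool moves NVIDIA's
# data-centre revenue by roughly 0.75%.
ELASTICITY = 0.75


def pool_change_pct(delta_bn: float, capex: dict[str, float] = CAPEX_BN) -> float:
    """Percent change in the combined pool for a dollar change (in $bn)."""
    return 100.0 * delta_bn / sum(capex.values())


def dc_revenue_change_pct(pool_pct: float, elasticity: float = ELASTICITY) -> float:
    """First-order percent change in data-centre revenue for a pool change."""
    return elasticity * pool_pct


if __name__ == "__main__":
    print(f"Combined pool: ${sum(CAPEX_BN.values()):.0f}bn")
    # Hypothetical example: the three budgets are trimmed by $10bn in total.
    pct = pool_change_pct(-10.0)
    print(f"Pool {pct:+.2f}% -> data-centre revenue {dc_revenue_change_pct(pct):+.2f}%")
```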

Supply-Chain Bottlenecks

NVIDIA depends on Taiwan Semiconductor Manufacturing Company (TSMC) for wafers and on its scarce CoWoS-L advanced-packaging line. Management has reportedly locked up more than seventy percent of TSMC’s 2025 CoWoS-L capacity, so a single clean-room hiccup crimps shipments immediately. High-Bandwidth Memory (HBM) is the other choke-point: SK hynix’s HBM output is effectively sold out through 2025, with Micron and Samsung racing to add lines. A ten-percent HBM shortfall could idle seven percent of planned GPU output and shave a point off gross margin. Add an eighteen-percent jump in air-freight costs as cargo avoids the Red Sea, and every incremental cost dent shows up in earnings per share (EPS).

Speculative note: even a quarter-point miss in TSMC’s CoWoS yield could vaporise one billion dollars of quarterly revenue; a rapid easing of HBM tightness would let gross margin overshoot guidance.

Competitive & Technological Arms Race

Advanced Micro Devices’ Instinct MI325/355 accelerators already train Meta’s early Llama-4 model; Google’s sixth-generation Tensor Processing Unit (TPU v6) and Amazon’s Trainium 2 push internal silicon strategies. Start-ups such as Groq are posting eye-popping inference benchmarks, and OpenAI taped out a “Tigris” chip on TSMC’s 3-nanometre node. Each advance compresses NVIDIA’s price-performance edge unless the company widens its CUDA software moat.

Speculative note: if a cloud provider hits full cost-parity on its own chip, expect a rapid reset in NVIDIA’s average selling prices; should Tigris yields exceed sixty percent, four percent of 2026 sales could vanish before analyst models adjust.

Regulatory & Geopolitical Climate

The U.S. Bureau of Industry and Security’s May rule throttles the new B100’s performance density for China, threatening a region that once generated twenty-plus percent of data-centre revenue. The CHIPS and Science Act funnels six-point-six billion dollars to TSMC’s Arizona fab, but its guardrails bar award recipients from materially expanding semiconductor manufacturing capacity in mainland China, nudging long-run costs higher. Taiwan Strait tensions inflate cargo-insurance premiums, embedding a “war discount” into the multiple. Meanwhile, U.S. regulators have reportedly begun probing CUDA’s dominance; forced un-bundling would bleed margin over time.

Speculative note: a live-fire scare near Taiwan could widen that war discount by two P/E turns overnight; even a preliminary antitrust complaint against CUDA would knock 300 basis points off consensus margin assumptions.

Company-Specific Levers

Morningstar’s June report pegs trailing-twelve-month gross margin at 70.1 percent, above the five-year average of 66.4 percent and heading toward management’s 74 percent target. Every percentage point of margin moves EPS by roughly six percent. Research and development stands at only 9.9 percent of revenue, down from an 18.9 percent five-year average, highlighting operating leverage. Cash reached fifteen-point-two billion dollars at the April close; net margin sits at a hefty 51.7 percent. A fresh 25-billion-dollar buy-back offsets dilution, though option grants and restricted stock units (RSUs) still run in the mid-single digits of revenue.

Speculative note: if memory and substrate prices ease sooner than expected, gross margin could pierce 74 percent and surprise the market; a wafer-price spike or bulge in stock-based compensation would dent per-share growth just as fast.

How a Shock Becomes a Price Move

Imagine SK hynix missing HBM output by ten percent: finished GPU dies sit idle waiting for memory, analysts slice six billion dollars from data-centre revenue, gross margin falls on under-utilisation, consensus EPS drops eight percent, and traders yank a full P/E turn. History suggests a 15-to-17 percent price gap-down within forty-eight hours, mirroring the Q3 FY-22 supply scare.
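The chain above rests on the identity price = EPS × P/E, so the two hits compound rather than add. Here is a minimal sketch, assuming a hypothetical starting multiple of 30 (this report does not state one): an eight-percent EPS cut plus a one-turn de-rating gives a mechanical drop of about 11 percent. The 15-to-17 percent historical gap-down cited above is an empirical observation, and this two-factor identity alone does not account for the difference.

```python
def price_change_pct(eps_change_pct: float, base_pe: float, pe_change_turns: float) -> float:
    """Percent price change implied by price = EPS x P/E.

    eps_change_pct: e.g. -8.0 for an 8% consensus EPS cut.
    base_pe: starting multiple (a hypothetical input here).
    pe_change_turns: e.g. -1.0 for a one-turn de-rating.
    """
    eps_factor = 1.0 + eps_change_pct / 100.0
    pe_factor = (base_pe + pe_change_turns) / base_pe
    return 100.0 * (eps_factor * pe_factor - 1.0)


if __name__ == "__main__":
    # HBM-shock scenario from the text, with an assumed starting P/E of 30.
    move = price_change_pct(eps_change_pct=-8.0, base_pe=30.0, pe_change_turns=-1.0)
    print(f"Mechanical price move: {move:.1f}%")
```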

Turning the Map into an Edge

Automate the data feeds—Federal Reserve Economic Data (FRED) for yields, TrendForce for CoWoS queues, BIS RSS for licence shifts—and convert each print to a twelve-month Z-score. Any high-beta node breaching ±2 sigma fires an alert. Pre-plan moves (“Trim twenty percent of my overweight if CoWoS tightens and EPS risk tops five percent”) so you act methodically rather than emotionally.
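Here is a minimal sketch of the alerting step. It assumes each feed has already been reduced to a series of monthly prints; fetching from FRED, TrendForce, or the BIS feed is not shown, and the sample CoWoS lead-time numbers are invented for illustration. The sketch scores the latest print against the trailing twelve months using the sample standard deviation and flags anything beyond ±2 sigma. The function names are hypothetical.

```python
from statistics import mean, stdev


def trailing_z_score(history: list[float], latest: float, window: int = 12) -> float:
    """Z-score of `latest` against the last `window` prints in `history`."""
    recent = history[-window:]
    if len(recent) < 2:
        raise ValueError("need at least two prints to estimate dispersion")
    sigma = stdev(recent)  # sample standard deviation
    if sigma == 0:
        raise ValueError("flat history: z-score undefined")
    return (latest - mean(recent)) / sigma


def breaches(z: float, threshold: float = 2.0) -> bool:
    """True when the print sits outside +/- threshold sigma."""
    return abs(z) > threshold


if __name__ == "__main__":
    # Invented CoWoS lead-time series (weeks), oldest first.
    cowos_weeks = [40, 41, 40, 42, 41, 40, 41, 42, 41, 40, 41, 41]
    z = trailing_z_score(cowos_weeks, latest=48)
    print(f"CoWoS lead-time z = {z:.1f}, alert = {breaches(z)}")
```

Using a fixed trailing window keeps each gauge’s baseline current. The trade-off is that a slow, persistent drift gets absorbed into the baseline and may never trip the alert.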

Bottom Line

NVIDIA trades like a high-delta call option on global AI compute. Its premium swells or deflates with every tremor in the six spokes above. Track those tremors early, map them to volume, margin and multiple, and you can move before the earnings release—or the price chart—tips off the herd.

Dark Stone Capital View on NVIDIA

Near-term (6-12 mo).

We’re holding a larger-than-index stake in NVIDIA, confident that 2025 demand is locked in and TSMC’s packaging timetable is intact. The near-term swing factor is memory pricing, not orders. Tesla’s Optimus robot runs on Tesla silicon, so any NVIDIA uplift is limited to extra training demand until Dojo comes fully online.

Long-term (3-5 yr).

Still bullish, but no longer “all-in.” Cloud providers are rolling out their own chips, export rules cap China upside, and Tesla aims to replace third-party GPUs with Dojo. CUDA’s moat and the developer ecosystem justify a premium multiple, just not today’s extremes.

Upside sparks – cheaper high-bandwidth memory, delays in cloud ASIC programs, or real yields sliding below 1.5 percent.

Downside landmines – tighter China export caps, proven cost-parity silicon from Google or Amazon, or a Taiwan Strait flashpoint.

Tactical plan – stay above benchmark weight while supply lines hum, but trim 15-20 percent if CoWoS or HBM lead-time gauges spike. Add on any supply-driven sell-off. Re-evaluate if hyperscalers shift real volume to in-house chips or if Dojo meaningfully replaces NVIDIA GPUs.
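The tactical plan can be written down as an explicit rule so it is applied the same way each time. This minimal sketch makes several assumptions. A “spike” means a lead-time z-score above +2, matching the ±2 sigma alert threshold used for monitoring. The trim applies to the overweight rather than the whole position. Structural re-evaluation takes priority over trims, and trims take priority over adds. The plan does not say how much to add on a sell-off, so the sketch only flags it. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical inputs; the gauge names are illustrative, not a real feed."""
    cowos_lead_time_z: float        # z-score of CoWoS lead times
    hbm_lead_time_z: float          # z-score of HBM lead times
    supply_driven_selloff: bool     # share price fell on a supply headline
    in_house_shift_confirmed: bool  # real volume moves to in-house chips or Dojo


def tactical_action(signals: Signals, overweight_pct: float,
                    trim_fraction: float = 0.20, spike_z: float = 2.0) -> tuple[str, float]:
    """Return (action, new overweight in percentage points) under the plan.

    Assumed priority: re-evaluate first, then supply-spike trims, then adds.
    """
    if not 0.15 <= trim_fraction <= 0.20:
        raise ValueError("plan calls for a 15-20 percent trim")
    if signals.in_house_shift_confirmed:
        return "re-evaluate", overweight_pct
    if max(signals.cowos_lead_time_z, signals.hbm_lead_time_z) > spike_z:
        return "trim", overweight_pct * (1.0 - trim_fraction)
    if signals.supply_driven_selloff:
        return "add", overweight_pct  # add size is not specified in the plan
    return "hold", overweight_pct


if __name__ == "__main__":
    # Hypothetical reading: CoWoS lead times spike while HBM is calm.
    action, weight = tactical_action(Signals(2.4, 0.6, False, False), overweight_pct=3.0)
    print(f"{action}: overweight {weight:.2f} pts")
```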

Sources

- Federal Reserve H.15 & H.4.1 releases (real yields and balance-sheet).

- Bloomberg Dollar Index & SOXX ETF flow trackers (year-to-date 2025).

- Company filings and earnings calls: Microsoft, Amazon, Alphabet, NVIDIA Q1 FY-25.

- TrendForce CoWoS capacity notes (February 2025).

- Earnings transcripts: SK hynix, Micron, Samsung (HBM supply).

- U.S. BIS Interim Final Rule on AI chips (May 2025).

- CHIPS Act award announcements (April 2025).

- Press and patent filings for AMD Instinct, Google TPU v6, Amazon Trainium 2, OpenAI “Tigris.”